Hey, maybe no one will notice that it broke?

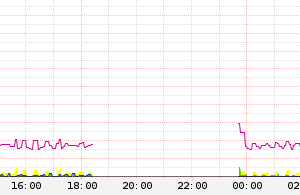

It was no black swan event that brought down a big chunk of Hurricane Electric’s data center #2 in Fremont last weekend. Instead, it was an easily foreseeable malfunction that should have been taken into account when the center was designed. According to a postmortem report posted by Linode – the company primarily affected by the outage – when PG&E’s line went out at the facility, a bad battery kept backup power from kicking in…

> Seven of the facility’s eight generators started correctly and provided uninterrupted power. Unfortunately, one generator experienced an electromechanical failure and failed to start. This caused an outage which affected our entire deployment in Fremont….
>
> The maintenance vendor for the generator dispatched a technician to the datacenter and it was determined that a battery used for starting the generator failed under load. The batteries were subsequently replaced by the technician. The generators are tested monthly, and the failed generator passed all of its checks two weeks prior to the outage. It was also tested under load earlier in the month.

For the next four and a half hours, the best anyone could do was wait for PG&E to restore service.

Batteries go bad, and often at the worst possible time. And that’s not the only reason a generator might fail. Not designing the system with sufficient excess capacity and load balancing/sharing capability is inexcusable. Sitting around helpless when the inevitable happens is pathetic.
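To make the excess capacity point concrete, here is a rough sketch of the kind of check I have in mind: can the remaining generators carry the full load if any single unit fails to start? The function name and every number below are hypothetical, picked purely for illustration. Nothing here comes from Hurricane Electric or Linode, and it assumes the generators can actually share load across the whole facility.

```python
def survives_any_single_failure(capacities_kw, load_kw):
    """Return True if the load can still be carried after any one generator fails.

    Assumes all generators feed a shared bus, so the survivors can pick up
    the failed unit's share. Names and units are illustrative only.
    """
    total_kw = sum(capacities_kw)
    # Remove each generator in turn and check what is left against the load.
    return all(total_kw - failed_kw >= load_kw for failed_kw in capacities_kw)


# Hypothetical facility: eight identical 500 kW generators.
generators_kw = [500] * 8

# 3,800 kW of load leaves no room to lose a unit (only 3,500 kW would remain).
print(survives_any_single_failure(generators_kw, 3800))  # False

# 3,500 kW of load can ride through the loss of any one generator.
print(survives_any_single_failure(generators_kw, 3500))  # True
```

If a check like this comes back false, one dead starter battery is enough to take customers down.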

Hurricane Electric hasn’t made any public statements about the incident – at least not that I can find. I’d love to hear their side of it. If a telecoms company wants to rank in the top tier, it needs to take ownership of the problem when a major outage hits. That means having the technical resources available to fix it and communicating effectively and openly about it with the public and not just its immediate customers.